OpenCV #012 Discrete Fourier Transform, Part 2

Highlights: In the previous post, we learnt some fundamental details about the Fourier transform and why it’s worth learning. We also showed how to transform an image into its frequency domain. I recommend you read about it here if you haven’t. In this section, we would focus on filtering in the frequency domain. We would see the effects of applying a low and high pass filter.

Almost all natural images have similar power spectrum

Tutorial Overview:

1. Low Pass Filter

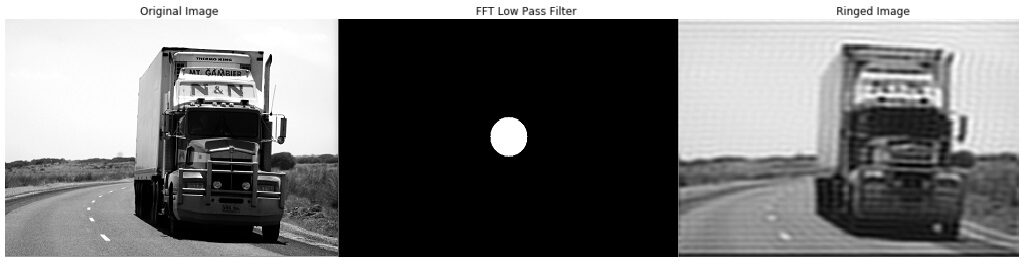

Let’s start out with some example by taking a look at the spectra of a real image on the right below.

By analysing the magnitude spectrum above, you would see this really white bright shiny thing at the middle. You may see that there is a lot of power in the middle. With that in mind, you might as well also want to pay attention as you move further away from that bright shiny thing towards the edges. It begins to fade or might as well say, it’s getting darker.

Suppose, we want to get rid of all the low-frequency contents and what is that? It is the region of the power spectrum with the white dot. What do we need to do? Firstly, we need to take the image and generate a power spectrum out of it. Secondly, once that is being done, we then need to eliminate all the things around the lower frequency. In other words, take the brighter part of the magnitude spectrum and zero out all the values around it. Surely there would be no high-frequency components at all.

For the purpose of illustration, we are going to reconstruct the image and that is performing the inverse Discrete Fourier transform. As a result of that, all we get is an ugly looking lines in the image. This is usually referred to as \(ringing \). Below we provided some code samples on how to actually do this yourself.

Python

C++

#include <opencv2/opencv.hpp>

#include <opencv2/imgproc/imgproc_c.h>

#include <iostream>

using namespace std;

using namespace cv;

cv::Mat computeDFT(Mat image);

void fftShift(Mat magI);

void lowpassFilter(const cv::Mat &dft_Filter, int distance);

int main(){

int radius=35;

cv::Mat img, complexImg, filter, filterOutput, imgOutput, planes[2];

img = imread("truck.jpg", 0);

if(img.empty())

{

return -1;

}

complexImg = computeDFT(img);

filter = complexImg.clone();

lowpassFilter(filter, radius); // create an ideal low pass filter

fftShift(complexImg); // rearrage quadrants

mulSpectrums(complexImg, filter, complexImg, 0); // multiply 2 spectrums

fftShift(complexImg); // rearrage quadrants

// compute inverse

idft(complexImg, complexImg);

split(complexImg, planes);

normalize(planes[0], imgOutput, 0, 1, CV_MINMAX);

split(filter, planes);

normalize(planes[1], filterOutput, 0, 1, CV_MINMAX);

imshow("Input image", img);

waitKey(0);

imshow("Filter", filterOutput);

waitKey(0);

imshow("Low pass filter", imgOutput);

waitKey(0);

destroyAllWindows();

return 0;

}

// create an ideal low pass filter

void lowpassFilter(const cv::Mat &dft_Filter, int distance)

{

Mat tmp = Mat(dft_Filter.rows, dft_Filter.cols, CV_32F);

Point centre = Point(dft_Filter.rows / 2, dft_Filter.cols / 2);

double radius;

for(int i = 0; i < dft_Filter.rows; i++)

{

for(int j = 0; j < dft_Filter.cols; j++)

{

radius = (double) sqrt(pow((i - centre.x), 2.0) + pow((double) (j - centre.y), 2.0));

if(radius>distance){

tmp.at<float>(i,j) = (float)0;

}else{

tmp.at<float>(i,j) = (float)1;

}

}

}

Mat toMerge[] = {tmp, tmp};

merge(toMerge, 2, dft_Filter);

}

// Compute the Discrete fourier transform

cv::Mat computeDFT(Mat image) {

Mat padded; //expand input image to optimal size

int m = getOptimalDFTSize( image.rows );

int n = getOptimalDFTSize( image.cols ); // on the border add zero values

copyMakeBorder(image, padded, 0, m - image.rows, 0, n - image.cols, BORDER_CONSTANT, Scalar::all(0));

Mat planes[] = {Mat_<float>(padded), Mat::zeros(padded.size(), CV_32F)};

Mat complex;

merge(planes, 2, complex); // Add to the expanded another plane with zeros

dft(complex, complex, DFT_COMPLEX_OUTPUT); // fourier transform

return complex;

}

void fftShift(Mat magI) {

// crop if it has an odd number of rows or columns

magI = magI(Rect(0, 0, magI.cols & -2, magI.rows & -2));

int cx = magI.cols/2;

int cy = magI.rows/2;

Mat q0(magI, Rect(0, 0, cx, cy)); // Top-Left - Create a ROI per quadrant

Mat q1(magI, Rect(cx, 0, cx, cy)); // Top-Right

Mat q2(magI, Rect(0, cy, cx, cy)); // Bottom-Left

Mat q3(magI, Rect(cx, cy, cx, cy)); // Bottom-Right

Mat tmp; // swap quadrants (Top-Left with Bottom-Right)

q0.copyTo(tmp);

q3.copyTo(q0);

tmp.copyTo(q3);

q1.copyTo(tmp); // swap quadrant (Top-Right with Bottom-Left)

q2.copyTo(q1);

tmp.copyTo(q2);

}

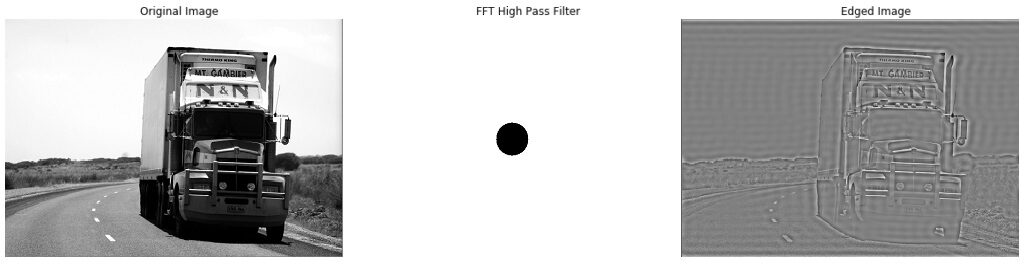

2. High Pass Filter

To spice things up a bit, we want to do something much cooler. Instead of keeping the low-frequency content, we are going to do the opposite. This means keeping the high-frequency content and remove all the low-frequency content. In simple word, zero out the middle part of the magnitude spectrum which is the white dot and the other part remains untouched.

A better question now is what are we going to see. Before we spill out the answer. Take 5 seconds to reason what exactly is going to be our result. Are you done? Okay. As soon as we remove the middle part and perform reconstruction, we are left with an edge image. This must have jugged your memory back to a post written earlier about the Sobel operator and an Image gradient. If you think of it a bit, the high-frequency components are giving us where the edges are in the image. Also here is the code used in creating these image.

Python

C++

#include <opencv2/opencv.hpp>

#include <opencv2/imgproc/imgproc_c.h>

#include <iostream>

using namespace std;

using namespace cv;

void fftShift(Mat magI);

cv::Mat computeIDFT(const cv::Mat &complexImage);

cv::Mat computeDFT(Mat image);

void highpassFilter(Mat &dft_Filter, int distance);

int main(){

int radius=30;

cv::Mat img, complexImg, filter, filterOutput, imgOutput, planes[2];

img = imread("truck.jpg", 0);

if(img.empty())

{

return -1;

}

complexImg = computeDFT(img);

filter = complexImg.clone();

highpassFilter(filter, radius); // create an ideal high pass filter

fftShift(complexImg); // rearrage quadrants

mulSpectrums(complexImg, filter, complexImg, 0); // multiply 2 spectrums

fftShift(complexImg); // rearrage quadrants

// compute inverse

idft(complexImg, complexImg);

split(complexImg, planes);

normalize(planes[0], imgOutput, 0, 1, CV_MINMAX);

split(filter, planes);

normalize(planes[1], filterOutput, 0, 1, CV_MINMAX);

imshow("Input image", img);

waitKey(0);

imshow("Filter", filterOutput);

waitKey(0);

imshow("High pass filter", imgOutput);

waitKey(0);

destroyAllWindows();

return 0;

}

// Compute the low pass highpass

void highpassFilter(Mat &dft_Filter, int distance)

{

Mat tmp = Mat(dft_Filter.rows, dft_Filter.cols, CV_32F);

Point centre = Point(dft_Filter.rows / 2, dft_Filter.cols / 2);

double radius;

for(int i = 0; i < dft_Filter.rows; i++)

{

for(int j = 0; j < dft_Filter.cols; j++)

{

radius = (double) sqrt(pow((i - centre.x), 2.0) + pow((double) (j - centre.y), 2.0));

if(radius>distance){

tmp.at<float>(i,j) = (float)1;

}else{

tmp.at<float>(i,j) = (float)0;

}

}

}

Mat toMerge[] = {tmp, tmp};

merge(toMerge, 2, dft_Filter);

}

// Compute the Discrete fourier transform

cv::Mat computeDFT(Mat image) {

Mat padded; //expand input image to optimal size

int m = getOptimalDFTSize( image.rows );

int n = getOptimalDFTSize( image.cols ); // on the border add zero values

copyMakeBorder(image, padded, 0, m - image.rows, 0, n - image.cols, BORDER_CONSTANT, Scalar::all(0));

Mat planes[] = {Mat_<float>(padded), Mat::zeros(padded.size(), CV_32F)};

Mat complex;

merge(planes, 2, complex); // Add to the expanded another plane with zeros

dft(complex, complex, DFT_COMPLEX_OUTPUT); // fourier transform

return complex;

}

// Compute the inverse of the Fourier Transform

cv::Mat computeIDFT(const cv::Mat &complexImage) {

//calculating the idft

cv::Mat inverseTransform;

cv::dft(complexImage, inverseTransform, cv::DFT_INVERSE | cv::DFT_REAL_OUTPUT);

normalize(inverseTransform, inverseTransform, 0, 1, CV_MINMAX);

return inverseTransform;

}

void fftShift(Mat magI) {

// crop if it has an odd number of rows or columns

magI = magI(Rect(0, 0, magI.cols & -2, magI.rows & -2));

int cx = magI.cols/2;

int cy = magI.rows/2;

Mat q0(magI, Rect(0, 0, cx, cy)); // Top-Left - Create a ROI per quadrant

Mat q1(magI, Rect(cx, 0, cx, cy)); // Top-Right

Mat q2(magI, Rect(0, cy, cx, cy)); // Bottom-Left

Mat q3(magI, Rect(cx, cy, cx, cy)); // Bottom-Right

Mat tmp; // swap quadrants (Top-Left with Bottom-Right)

q0.copyTo(tmp);

q3.copyTo(q0);

tmp.copyTo(q3);

q1.copyTo(tmp); // swap quadrant (Top-Right with Bottom-Left)

q2.copyTo(q1);

tmp.copyTo(q2);

}

cv::Mat magnitudeSpectrum(Mat complex) {

Mat magI;

Mat planes[] = {

Mat::zeros(complex.size(), CV_32F),

Mat::zeros(complex.size(), CV_32F)

};

split(complex, planes); // planes[0] = Re(DFT(I)), planes[1] = Im(DFT(I))

magnitude(planes[0], planes[1], magI); // sqrt(Re(DFT(I))^2 + Im(DFT(I))^2)

// switch to logarithmic scale: log(1 + magnitude)

magI += Scalar::all(1);

log(magI, magI);

fftShift(magI); // rearrage quadrants

// Transform the magnitude matrix into a viewable image (float values 0-1)

normalize(magI, magI, 0, 1, CV_MINMAX);

return magI;

}

If you consider making the edges to be much brighter, we could use a sharpening filter, called the Laplacian Operator. This sharpens the image by giving it twice the original image, minus a little bit of blurring. You may learn about some blurring techniques here. By comparing the output, you will know this image contains a much brighter intensity than the image which hasn’t been sharpened. That’s because we have changed the high frequencies.

Python

C++

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <iostream>

#include <math.h>

using namespace cv;

using namespace std;

int main() {

Mat image, gray, output, abs_output;

int kernelSize = 3;

int ddepth = CV_16S;

// Loading the image

image=imread("truck.jpg", IMREAD_COLOR);

// Take care of our edge cases to make sure there was no error in loading the file.

if(image.empty()){

cout << "Error loading image" << endl;

return -1;

}

cv::imshow("Original image", image);

cv::waitKey();

// converting the color image into grayscale

cvtColor(image, gray, COLOR_BGR2GRAY);

cv::imshow("Gray image", gray);

GaussianBlur(gray, gray, Size(3,3), 0, 0, BORDER_DEFAULT );

/// Apply Laplace function (3x3)

cv::Laplacian(gray, output, ddepth, kernelSize);

convertScaleAbs(output, abs_output);

cv::imshow("Laplacian (3,3)", abs_output );

waitKey(0);

return 0;

}

Before we round up this part, the process of filtering in the frequency domain is quite simple:

- First, transform the image data to the frequency domain which means computing, applying the fast Fourier transform or discrete Fourier transform.

- Multiply the spectrum of the image with some filtering mask.

- Finally, transform the spectrum back to the spatial domain by computing the inverse of either the discrete Fourier transform.

Summary

To conclude what we have learned so far, we have seen how preserving the brightest part of the power spectrum and zeroing out the other part can be used as a low pass filter. When we calculate the inverse Fourier transform, we get a ringing image. Also removing the brightest part of the power spectrum and preserving the other parts which are fading as it moves towards the edges and calculating the inverse Fourier Transform gives us the edges in our image. Another edge detection algorithm, you may read about is the Laplace operator.

In the next post, we will learn more about the Harris Corner Detector.