#012 B Building a Deep Neural Network from scratch in Python

In this post, we will see how to implement a deep Neural Network in Python from scratch. It isn’t something that we will do often in practice, but it is a good way to understand the inner workings of Deep Learning.

First, we will import the libraries we will use in the following code.
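A minimal sketch of the imports, assuming NumPy is the only numerical library the network itself needs. A plotting library such as matplotlib would also be needed for the plots later in the post. Fixing the random seed (1 here, an arbitrary choice) makes the random initialization reproducible.

```python
import numpy as np

# Fix the seed so that the random parameter initialization is reproducible
np.random.seed(1)
```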

In the following code, we will define the activation functions sigmoid, ReLU, and tanh. Along with the activations, they also cache the values we will need in the backward propagation step, namely the Z values. After that, we will define functions that output \textbf{dZ}. So, to be clear: when we calculate the activations of a hidden layer, we also cache the value of Z^{[l]}, and we have a set of “backward” functions that output the \textbf{dZ} values.

To calculate \textbf{dZ}^{[l]}, we will use the following formula, where g’^{[l]} is the derivative of the activation function in layer l and * denotes element-wise multiplication:

\textbf{dZ}^{[l]} = \textbf{dA}^{[l]} * g’^{[l]}(\textbf{Z}^{[l]})
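A minimal sketch of the activation functions and their backward counterparts. It assumes that each forward function returns the pair (A, Z) so that Z can be cached, and that the backward functions take dA and that cached Z. The names sigmoid_backward, relu_backward and tanh_backward are hypothetical. Each backward function applies dZ = dA * g'(Z) element-wise.

```python
import numpy as np

def sigmoid(Z):
    """Sigmoid activation; returns A and Z (the cache for backprop)."""
    A = 1 / (1 + np.exp(-Z))
    return A, Z

def relu(Z):
    """ReLU activation; returns A and Z (the cache for backprop)."""
    A = np.maximum(0, Z)
    return A, Z

def tanh(Z):
    """tanh activation; returns A and Z (the cache for backprop)."""
    A = np.tanh(Z)
    return A, Z

def sigmoid_backward(dA, cache):
    """dZ = dA * s(Z) * (1 - s(Z))."""
    Z = cache
    s = 1 / (1 + np.exp(-Z))
    return dA * s * (1 - s)

def relu_backward(dA, cache):
    """dZ = dA where Z > 0, and 0 elsewhere (derivative at 0 taken as 0)."""
    Z = cache
    return dA * (Z > 0)

def tanh_backward(dA, cache):
    """dZ = dA * (1 - tanh(Z)^2)."""
    Z = cache
    return dA * (1 - np.tanh(Z) ** 2)
```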

The function initialize_parameters_deep accepts a list with the number of units in each layer of our deep neural network, where the first element is the number of input features. We will initialize all parameters with small random values. The dimension of the matrix \textbf{W}^{[l]} is (list[l], list[l-1]), and the dimension of b^{[l]} is (list[l], 1). In the previous post, we defined the dimensions of these matrices in the following manner:

| Parameter | Dimension |
| --- | --- |
| W^{[l]} | (n^{[l]}, n^{[l-1]}) |
| b^{[l]} | (n^{[l]}, 1) |

Table of dimensions for parameters W^{[l]} and b^{[l]}

So we iterate over the layers in a for loop to initialize the parameters of every layer, and we store all of them in a dictionary.
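A minimal sketch of initialize_parameters_deep, assuming the dictionary keys "W1", "b1", "W2", … and a scaling factor of 0.01 for the random weights (both assumptions). In this sketch the biases start at zero, a common choice; small random values for b would also work, since the random weights already break the symmetry between units.

```python
import numpy as np

def initialize_parameters_deep(layer_dims):
    """layer_dims[l] is the number of units n[l]; layer_dims[0] is the input size."""
    parameters = {}
    L = len(layer_dims)  # number of layers, including the input layer
    for l in range(1, L):
        # W[l] has shape (n[l], n[l-1]); small random values break symmetry
        parameters["W" + str(l)] = np.random.randn(layer_dims[l], layer_dims[l - 1]) * 0.01
        # b[l] has shape (n[l], 1)
        parameters["b" + str(l)] = np.zeros((layer_dims[l], 1))
    return parameters
```

For example, initialize_parameters_deep([5, 4, 3]) returns W1 with shape (4, 5), b1 with shape (4, 1), W2 with shape (3, 4) and b2 with shape (3, 1).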

Functions that make calculations in a single node of a layer

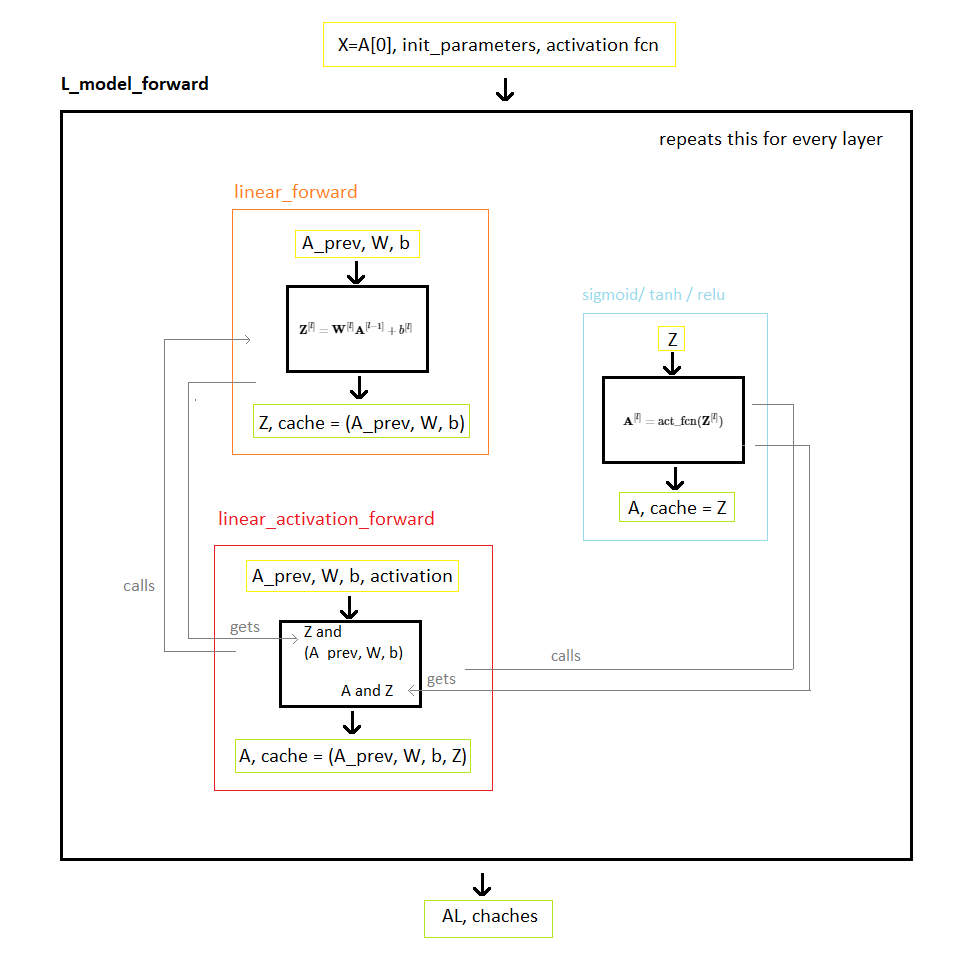

The function linear_forward(A_prev, W, b) takes the activations from the previous layer and calculates the Z value in the current layer, Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}. If we are calculating Z in the first hidden layer, then A_{prev} = A^{[0]} = X. This function also caches all values used in this calculation, so we put A_prev, W and b in a tuple cache. We can say that this function makes the calculation for the first half of each neuron.
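A minimal sketch of linear_forward, assuming the cache is the tuple (A_prev, W, b) described above:

```python
import numpy as np

def linear_forward(A_prev, W, b):
    """Linear half of a neuron: Z = W A_prev + b."""
    # b has shape (n[l], 1) and is broadcast across the m examples (columns)
    Z = W @ A_prev + b
    cache = (A_prev, W, b)  # needed later by the backward step
    return Z, cache
```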

The function linear_activation_forward takes as inputs the activations from the previous layer A_prev, the parameters of the layer in which we are calculating Z, and the type of activation function used in that layer. This function calls the previously defined function linear_forward and then the chosen activation function. It returns the activations in the current layer and caches the values: the activations from the previous layer A_prev, the parameters W and b used in that layer's calculations, and the value Z.
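A minimal sketch of linear_activation_forward. It assumes the activation is selected with a string ("sigmoid" or "relu") and that the returned cache has the layout ((A_prev, W, b), Z). The helpers are re-declared compactly so that the snippet runs on its own.

```python
import numpy as np

def sigmoid(Z):
    return 1 / (1 + np.exp(-Z)), Z          # (A, activation cache)

def relu(Z):
    return np.maximum(0, Z), Z              # (A, activation cache)

def linear_forward(A_prev, W, b):
    return W @ A_prev + b, (A_prev, W, b)   # (Z, linear cache)

def linear_activation_forward(A_prev, W, b, activation):
    """One full layer: linear step followed by the chosen activation."""
    Z, linear_cache = linear_forward(A_prev, W, b)
    if activation == "sigmoid":
        A, activation_cache = sigmoid(Z)
    elif activation == "relu":
        A, activation_cache = relu(Z)
    else:
        raise ValueError(f"unknown activation: {activation}")
    # cache = ((A_prev, W, b), Z)
    return A, (linear_cache, activation_cache)
```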

To do a complete forward pass through the neural network, we need to implement the following function, L_model_forward.
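A minimal sketch of L_model_forward, assuming ReLU in every hidden layer and sigmoid in the output layer, with parameters stored under the keys "W1", "b1", …. A compact linear_activation_forward is re-declared so that the snippet is self-contained. One cache is appended per layer, so the function returns L caches.

```python
import numpy as np

def linear_activation_forward(A_prev, W, b, activation):
    Z = W @ A_prev + b
    A = 1 / (1 + np.exp(-Z)) if activation == "sigmoid" else np.maximum(0, Z)
    return A, ((A_prev, W, b), Z)

def L_model_forward(X, parameters):
    """[LINEAR -> RELU] x (L-1) -> LINEAR -> SIGMOID."""
    caches = []
    A = X                      # A[0] = X
    L = len(parameters) // 2   # each layer has one W and one b
    for l in range(1, L):
        A, cache = linear_activation_forward(
            A, parameters["W" + str(l)], parameters["b" + str(l)], "relu")
        caches.append(cache)
    # Output layer uses sigmoid for binary classification
    AL, cache = linear_activation_forward(
        A, parameters["W" + str(L)], parameters["b" + str(L)], "sigmoid")
    caches.append(cache)
    return AL, caches
```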

If you are a bit confused about what each function does, look at the following picture.

L_model_forward

The following function, compute_cost, calculates the cost when the algorithm outputs the values AL and the ground truth labels are Y.
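A minimal sketch of compute_cost, assuming the binary cross-entropy cost that matches a sigmoid output layer, J = -\frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} \log a^{[L](i)} + (1 - y^{(i)}) \log(1 - a^{[L](i)}) \right), with AL and Y both of shape (1, m):

```python
import numpy as np

def compute_cost(AL, Y):
    """Binary cross-entropy averaged over the m examples."""
    m = Y.shape[1]
    cost = -np.sum(Y * np.log(AL) + (1 - Y) * np.log(1 - AL)) / m
    return float(np.squeeze(cost))  # plain Python float
```

For example, predictions of 0.5 for every example give a cost of log 2 ≈ 0.693, whatever the labels.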

To make calculations in the backward pass we will use the following equations:

\textbf{dW}^{[l]} = \frac{1}{m} \textbf{dZ}^{[l]} \textbf{A}^{[l-1]T}

db^{[l]} = \frac{1}{m}np.sum(\textbf{dZ}^{[l]}, axis = 1, keepdims = True)

\textbf{dA}^{[l-1]} = \textbf{W}^{[l]T} \textbf{dZ}^{[l]}

The function linear_backward takes as input dZ and the values cached in the forward propagation step, and outputs \textbf{dA}_{prev}, \textbf{dW} and db.
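A minimal sketch of linear_backward, assuming the cache is the tuple (A_prev, W, b) saved in the forward pass. Note that dW is averaged over the m examples, just like db.

```python
import numpy as np

def linear_backward(dZ, cache):
    """Gradients of the linear step Z = W A_prev + b."""
    A_prev, W, b = cache
    m = A_prev.shape[1]                          # number of examples
    dW = (dZ @ A_prev.T) / m                     # same shape as W
    db = np.sum(dZ, axis=1, keepdims=True) / m   # same shape as b
    dA_prev = W.T @ dZ                           # same shape as A_prev
    return dA_prev, dW, db
```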


The following function, L_model_backward, implements one complete backward propagation pass through the neural network. It takes as inputs the predictions \textbf{A}^{[L]} calculated in the last (output) layer, the labels Y, and the list of cached values. It first calculates \textbf{dA}^{[L]}, the derivative of the cost with respect to the output activations. Because we are doing binary classification, the output layer uses the sigmoid activation function, while all the other layers use the same activation function, so we can make the backpropagation calculations through the hidden layers with a for loop.

Notice also that the number of cached values in the list equals the number of layers of the neural network, so we cached L tuples. When we start the backpropagation step, we first use the last tuple from the list of cached values. Because indexing in Python starts from 0, this is the tuple at index L-1.
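A minimal sketch of L_model_backward, assuming ReLU in the hidden layers, a sigmoid output layer, the cross-entropy cost, and caches with the layout ((A_prev, W, b), Z). For that cost, \textbf{dA}^{[L]} = -\left( \frac{Y}{A^{[L]}} - \frac{1 - Y}{1 - A^{[L]}} \right). The helper linear_activation_backward and the "dA"/"dW"/"db" key scheme are assumptions for illustration.

```python
import numpy as np

def linear_backward(dZ, linear_cache):
    A_prev, W, b = linear_cache
    m = A_prev.shape[1]
    return W.T @ dZ, (dZ @ A_prev.T) / m, np.sum(dZ, axis=1, keepdims=True) / m

def linear_activation_backward(dA, cache, activation):
    """Hypothetical helper: dZ = dA * g'(Z), then the linear backward step."""
    linear_cache, Z = cache
    if activation == "sigmoid":
        s = 1 / (1 + np.exp(-Z))
        dZ = dA * s * (1 - s)
    else:  # relu
        dZ = dA * (Z > 0)
    return linear_backward(dZ, linear_cache)

def L_model_backward(AL, Y, caches):
    grads = {}
    L = len(caches)  # one cache per layer
    Y = Y.reshape(AL.shape)
    # Derivative of the cross-entropy cost with respect to AL
    dAL = -(np.divide(Y, AL) - np.divide(1 - Y, 1 - AL))
    # Output layer: its cache is the last one, at index L-1
    grads["dA" + str(L - 1)], grads["dW" + str(L)], grads["db" + str(L)] = \
        linear_activation_backward(dAL, caches[L - 1], "sigmoid")
    # Hidden layers L-1, ..., 1: cache index l belongs to layer l+1
    for l in reversed(range(L - 1)):
        dA_prev, dW, db = linear_activation_backward(grads["dA" + str(l + 1)], caches[l], "relu")
        grads["dA" + str(l)] = dA_prev
        grads["dW" + str(l + 1)] = dW
        grads["db" + str(l + 1)] = db
    return grads
```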

To clarify how these functions work, look at the following picture.

L_model_backward function

To update the parameters, we will use the update_parameters function, which performs one step of gradient descent.
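A minimal sketch of update_parameters, assuming a learning rate α passed as learning_rate and the key scheme "W1", "dW1", … for parameters and gradients. Each layer gets the gradient descent update \textbf{W}^{[l]} = \textbf{W}^{[l]} - \alpha \textbf{dW}^{[l]} and b^{[l]} = b^{[l]} - \alpha db^{[l]}.

```python
import numpy as np

def update_parameters(parameters, grads, learning_rate):
    """One gradient descent step for every layer."""
    L = len(parameters) // 2  # number of layers with parameters
    for l in range(1, L + 1):
        parameters["W" + str(l)] = parameters["W" + str(l)] - learning_rate * grads["dW" + str(l)]
        parameters["b" + str(l)] = parameters["b" + str(l)] - learning_rate * grads["db" + str(l)]
    return parameters
```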

We will define the following functions to easily make plots.

The following code builds an L-layer neural network by calling all the previously defined functions.
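A minimal, self-contained sketch of the whole training loop, assuming batch gradient descent, ReLU hidden layers and a sigmoid output. The name L_layer_model, its default learning rate and iteration count, and recording the cost every 100 iterations are all assumptions. The helpers from the earlier steps are re-declared compactly so that the snippet runs on its own.

```python
import numpy as np

def initialize_parameters_deep(layer_dims):
    params = {}
    for l in range(1, len(layer_dims)):
        params["W" + str(l)] = np.random.randn(layer_dims[l], layer_dims[l - 1]) * 0.01
        params["b" + str(l)] = np.zeros((layer_dims[l], 1))
    return params

def L_model_forward(X, params):
    caches, A, L = [], X, len(params) // 2
    for l in range(1, L + 1):
        W, b = params["W" + str(l)], params["b" + str(l)]
        Z = W @ A + b
        caches.append(((A, W, b), Z))
        # ReLU in hidden layers, sigmoid in the output layer
        A = 1 / (1 + np.exp(-Z)) if l == L else np.maximum(0, Z)
    return A, caches

def compute_cost(AL, Y):
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(AL) + (1 - Y) * np.log(1 - AL)) / m)

def L_model_backward(AL, Y, caches):
    grads, L = {}, len(caches)
    dA = -(Y / AL - (1 - Y) / (1 - AL))  # dA[L] for the cross-entropy cost
    for l in reversed(range(1, L + 1)):
        (A_prev, W, b), Z = caches[l - 1]
        if l == L:
            s = 1 / (1 + np.exp(-Z))
            dZ = dA * s * (1 - s)
        else:
            dZ = dA * (Z > 0)
        m = A_prev.shape[1]
        grads["dW" + str(l)] = dZ @ A_prev.T / m
        grads["db" + str(l)] = np.sum(dZ, axis=1, keepdims=True) / m
        dA = W.T @ dZ  # dA[l-1] for the next iteration
    return grads

def update_parameters(params, grads, learning_rate):
    for l in range(1, len(params) // 2 + 1):
        params["W" + str(l)] -= learning_rate * grads["dW" + str(l)]
        params["b" + str(l)] -= learning_rate * grads["db" + str(l)]
    return params

def L_layer_model(X, Y, layer_dims, learning_rate=0.0075, num_iterations=3000, print_cost=False):
    """Trains the network; returns the learned parameters and the recorded costs."""
    params = initialize_parameters_deep(layer_dims)
    costs = []
    for i in range(num_iterations):
        AL, caches = L_model_forward(X, params)            # forward pass
        cost = compute_cost(AL, Y)                          # cost
        grads = L_model_backward(AL, Y, caches)             # backward pass
        params = update_parameters(params, grads, learning_rate)  # update
        if i % 100 == 0:
            costs.append(cost)
            if print_cost:
                print(f"Cost after iteration {i}: {cost:.4f}")
    return params, costs
```

The recorded costs list is what you would pass to a plotting function to check that the cost decreases during training.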

To make predictions with the learned parameters, we will use the function predict, which is defined in the following code.
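A minimal sketch of prediction, assuming ReLU hidden layers, a sigmoid output, and a decision threshold of 0.5. The signature predict(X, parameters, threshold) is hypothetical. The function runs a forward pass with the learned parameters and converts the output probabilities into 0/1 labels.

```python
import numpy as np

def predict(X, parameters, threshold=0.5):
    """Forward pass with learned parameters, then threshold the probabilities."""
    A = X
    L = len(parameters) // 2
    for l in range(1, L + 1):
        Z = parameters["W" + str(l)] @ A + parameters["b" + str(l)]
        A = 1 / (1 + np.exp(-Z)) if l == L else np.maximum(0, Z)
    # Label 1 where the predicted probability exceeds the threshold
    return (A > threshold).astype(int)
```

Accuracy can then be measured as np.mean(predict(X, parameters) == Y).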

In the next post, we will learn about computer vision.