#001A Introduction to Deep Learning


as taught by ANDREW NG, DEEP LEARNING course

LECTURE NOTES

Deep learning is a rapidly growing sub-field of machine learning, and it is driving many developments that have already transformed traditional internet businesses such as web search and advertising.

In the past couple of years, deep learning has become good at a wide range of tasks, from reading X-ray images to delivering personalized education, precision agriculture, and even self-driving cars.

Over the next decades, we will have an opportunity to build an amazing AI-powered world and society, and maybe you will play a big role in creating it.

What exactly is AI? AI is the new electricity. About 100 years ago, the electrification of our society transformed every major industry, including transportation, manufacturing, healthcare, communication, and many more.

Today, deep learning is one of the most sought-after skills in technology.

What is a neural network?

A neural network is a mathematical model loosely inspired by the human brain and nervous system.

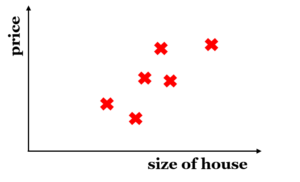

Let’s start with a house price prediction example. Suppose we have a dataset of six houses, and we know the price and the size of each one. We want to fit a function that predicts the price of a house from its size.

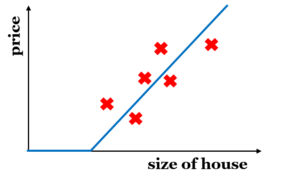

If you are familiar with linear regression, you would fit a straight line through these data points. Since we know that prices cannot be negative, we bend the line so that, instead of dropping below zero, it becomes a horizontal segment at 0.

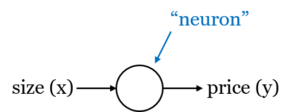

The blue line is the function for predicting the price of the house as a function of its size. You can think of this function as a very simple neural network.

The input to the neural network is the size of a house, denoted by \(x\). It goes into a node (a single neuron), which outputs the predicted price, denoted by \(y\).
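The single neuron described above can be sketched in a few lines of Python. This is only an illustration: the `weight` and `bias` values, the square-foot unit, and the price scale (thousands of dollars) are made-up assumptions, not numbers from the lecture. The "straight line clipped at zero" shape is commonly called a rectified linear unit (ReLU).

```python
def predict_price(size_sqft, weight=0.2, bias=-50.0):
    """Single-neuron price model: a straight line clipped at zero.

    weight and bias are hypothetical values chosen for illustration.
    """
    linear = weight * size_sqft + bias  # the straight-line part
    return max(0.0, linear)             # never predict a negative price


if __name__ == "__main__":
    for size in (100, 1000, 2000):
        print(size, predict_price(size))
```

For small sizes the line would go negative, so the neuron outputs 0. For larger sizes the output grows linearly with the size.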

If this is a neural network with a single neuron, a much larger neural network is formed by taking many of the single neurons and stacking them together.

You can think of this neuron as a single Lego brick. You form a bigger neural network by stacking together many of these Lego bricks.

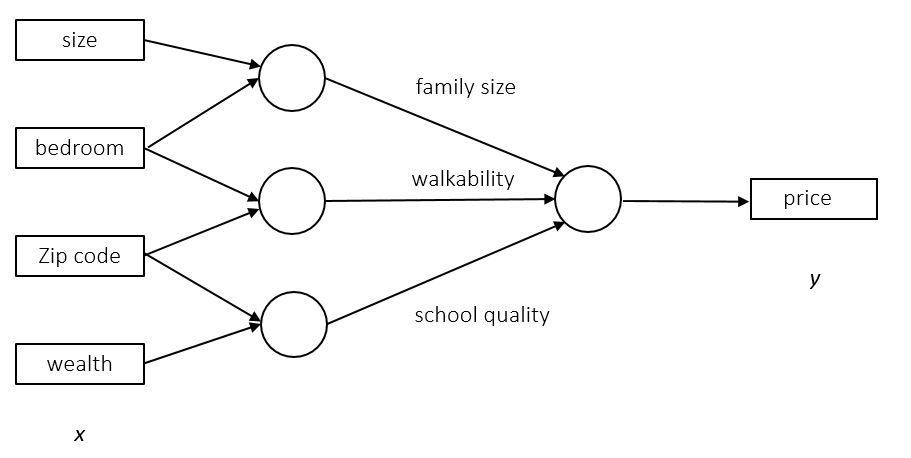

An example of a basic neural network with more input features is illustrated in the image above.
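To make the "stacking" idea concrete, here is a minimal sketch of a network built from three of these neurons. The two input features (size and number of bedrooms), the layer layout, and every weight are hypothetical. A real network would learn its weights from data rather than have them written by hand.

```python
def relu(z):
    """Clip negative values to zero."""
    return max(0.0, z)


def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, passed through ReLU."""
    return relu(sum(w * x for w, x in zip(weights, inputs)) + bias)


def tiny_network(features):
    """Two hidden neurons stacked in front of one output neuron.

    All weights below are made-up numbers for illustration only.
    """
    hidden = [
        neuron(features, [0.1, 10.0], -20.0),
        neuron(features, [0.05, 20.0], 0.0),
    ]
    # The output neuron takes the hidden activations as its inputs.
    return neuron(hidden, [1.0, 0.5], 0.0)


if __name__ == "__main__":
    # features = [size in square feet, number of bedrooms]
    print(tiny_network([1000.0, 3.0]))
```

Each hidden neuron sees all the input features, and the output neuron combines the hidden activations into a single predicted price.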

Supervised Learning with Neural Networks

There has been a lot of hype about neural networks, and perhaps some of that hype is justified, given how well they work. Yet it turns out that, so far, almost all the economic value created by neural networks has come through one type of machine learning called supervised learning.

Let’s see what that means and go over some examples.

In supervised learning, we have some input x, and we want to learn a function that maps it to some output y. For instance, in the house price prediction application, the input was some features of a home, and the goal was to estimate its price, y.
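As a rough illustration of "learning a function from x to y", the sketch below fits a line to six labeled examples. Note the substitution: it uses ordinary least squares rather than a neural network training loop, because the closed form is short and deterministic. The six sizes and prices are invented for the example.

```python
def fit_line(xs, ys):
    """Fit y ≈ w * x + b by ordinary least squares on labeled pairs (x, y)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


# Hypothetical training set: six houses with known sizes (x) and prices (y).
sizes = [50.0, 80.0, 100.0, 120.0, 150.0, 200.0]
prices = [110.0, 170.0, 210.0, 250.0, 310.0, 410.0]

w, b = fit_line(sizes, prices)


def predict(size):
    """Apply the learned mapping to a new, unseen input."""
    return w * size + b


if __name__ == "__main__":
    print(w, b, predict(130.0))
```

The labeled pairs play the role of supervision: the learned parameters are whatever makes the predictions match the known outputs as closely as possible.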

Here are some other examples where neural networks have been applied very effectively.

| Input (x) | Output (y) | Application | Neural Network Type |
| --- | --- | --- | --- |
| Home features | Price | Real Estate | Standard NN |
| Ad, Users info | Click on an ad? (0/1) | Online Advertising | Standard NN |
| Image | Object (1, …, 1000) | Photo tagging | CNN |
| Audio | Text transcripts | Speech recognition | RNN |
| English | Chinese | Machine translation | RNN |
| Image, Radars info | Position of other cars | Autonomous driving | Custom/Hybrid |

For example, we might input an image and want to output an index from 1 to 1,000 that indicates which of a thousand different image classes the picture belongs to. This can be used for photo tagging.
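One common way to turn a network's output into such a class index is to have it produce one score per class and pick the highest. The sketch below assumes that setup. The function name and the three-class score list are hypothetical, and a real photo tagger would have a thousand scores.

```python
def predict_class(scores):
    """Return the 1-based index of the class with the highest score."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best + 1  # classes are numbered from 1, as in the text


if __name__ == "__main__":
    # Hypothetical scores for a toy problem with three classes.
    print(predict_class([0.1, 0.7, 0.2]))
```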

Recent progress in speech recognition has also been very exciting. You can now input an audio clip to a neural network and have it output a text transcript.

Machine translation has also made huge strides thanks to deep learning: you can now have a neural network take an English sentence as input and directly output a Chinese sentence.

In autonomous driving, you might input a picture of what’s in front of your car, as well as some information from a radar, and based on that your network can be trained to tell you the position of the other cars on the road. This becomes a key component in autonomous driving systems.

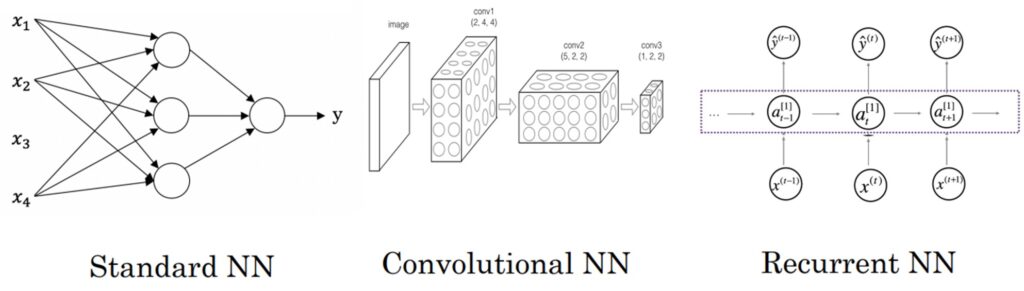

Different types of neural networks are useful for different applications.

- In the real estate application, we use a standard neural network architecture.

- For image applications, we’ll often use a Convolutional Neural Network (CNN).

- Audio is most naturally represented as a one-dimensional time series, that is, a temporal sequence. Hence, for sequence data, we often use a Recurrent Neural Network (RNN).

- Language, such as English or Chinese, is also naturally represented as sequence data, because the characters or words come one at a time. RNNs are often used for these applications as well.

- For more complex applications, like autonomous driving, where you have both an image and radar information, you may end up with a more custom or more complex hybrid neural network architecture.

Neural Networks examples

Structured and Unstructured Data

Machine learning is applied to both Structured Data and Unstructured Data.

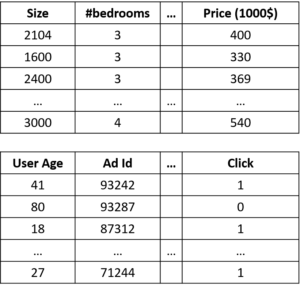

Structured data basically means databases of data. For example, in house price prediction, you might have a database with columns that tell you the size and the number of bedrooms of each house.

In predicting whether or not a user will click on an ad, we might have information about the user, such as their age, some information about the ad, and the label that we are trying to predict.

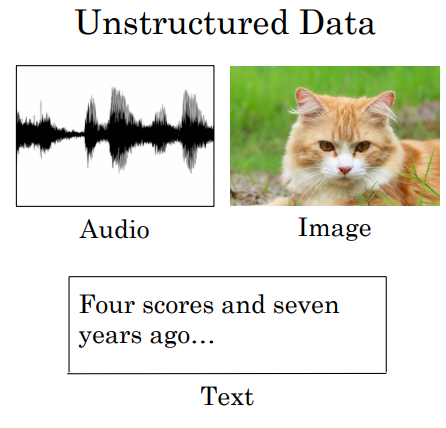

Structured data means that each of the features, such as the size of the house, the number of bedrooms, or the age of a user, has a very well-defined meaning. In contrast, unstructured data refers to things like raw audio, images in which you might want to recognize what’s in the picture, or text. Here, the features might be the pixel values in an image or the individual words in a piece of text.

Thanks to neural networks, computers are now much better at interpreting unstructured data than they were just a few years ago. This creates opportunities for many exciting new applications that use speech recognition, image recognition, and natural language processing of text. Such applications could not have been built even just two or three years ago.
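The difference shows up in how the features are built. The following sketch is purely illustrative: the house record, the tiny 2x3 grayscale image, and the sentence are all invented, and real pipelines use far richer encodings.

```python
# Structured data: each feature is a named column with a well-defined meaning.
house = {"size_sqft": 2104, "bedrooms": 3}
structured_features = [float(house["size_sqft"]), float(house["bedrooms"])]

# Unstructured data (image): the features are raw pixel values.
image = [
    [0, 255, 34],
    [12, 200, 90],
]  # hypothetical 2x3 grayscale image, values in 0..255
pixel_features = [p / 255 for row in image for p in row]

# Unstructured data (text): the features are the individual words,
# here mapped to integer ids through a small vocabulary.
text = "the house has a large garden"
words = text.split()
vocab = sorted(set(words))
word_ids = [vocab.index(word) for word in words]

if __name__ == "__main__":
    print(structured_features)
    print(pixel_features)
    print(word_ids)
```

In the structured case every number can be interpreted on its own. A single pixel value or word id carries little meaning until it is combined with its neighbors.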

Neural networks have transformed Supervised Learning and are creating tremendous economic value. It turns out that the basic technical ideas behind neural networks have mostly been around for many decades. So, why is it then that they’re only just now taking off and working so well?
